Technical Challenge: Checking For Overlap in a Series of Ranges

Introduction

I recently stumbled across a question that seemed fairly innocent at first. Something like:

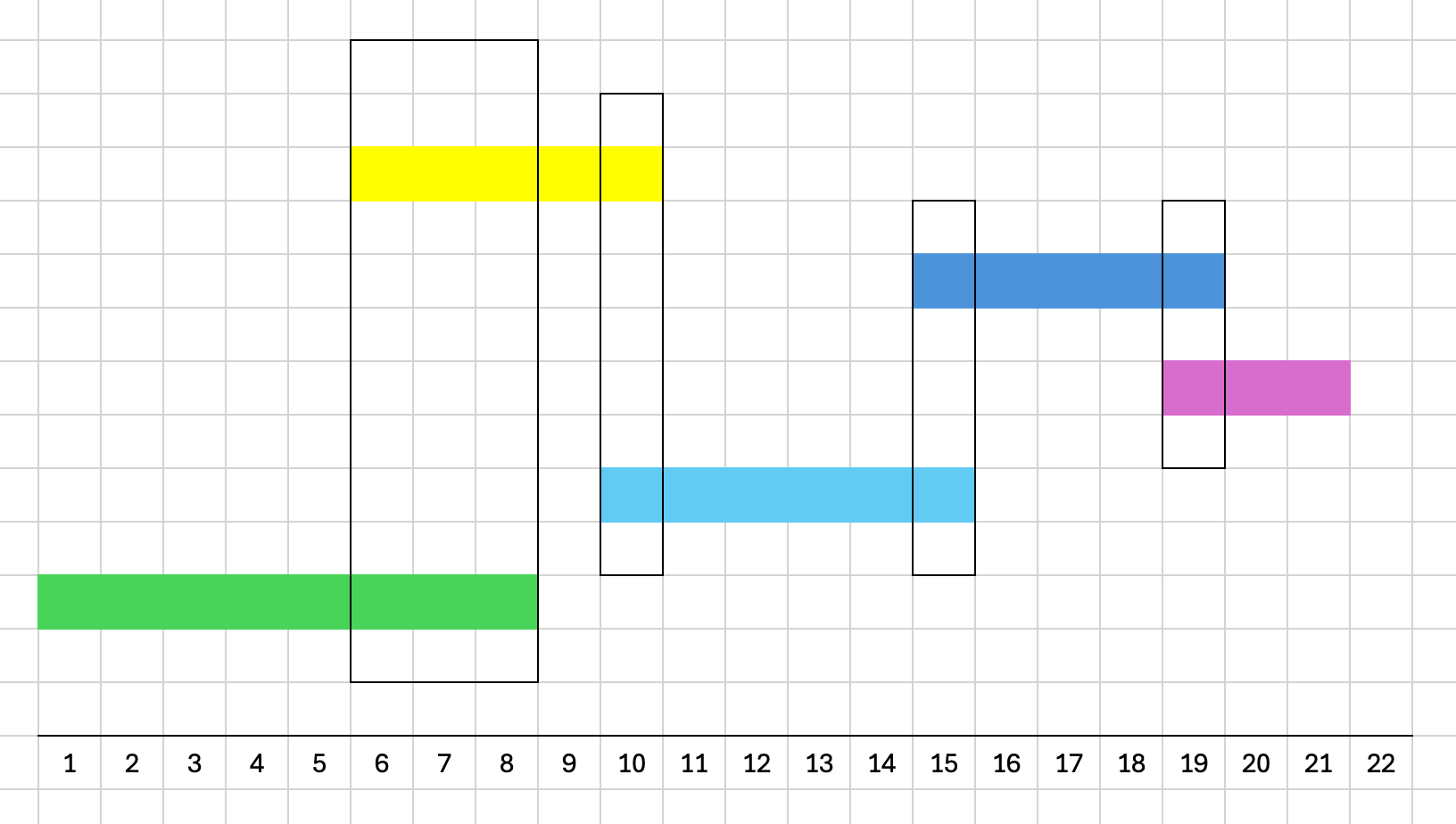

Given 3-5 price ranges, validate that none of them overlap.

It wasn't immediately clear to me why this would ever be a validation step that would be required, but that's not important here. For our sake, we can assume that our ranges are given in the form of:

$ranges = [

[0, 5],

[8, 13],

[18, 20],

];And we can assume that:

- All values are always integers

- The first number in a range is always smaller than the second

- Ranges are not necessarily in sorted order.

Given the low quantity of ranges, a pretty naive approach solves the problem for almost all implementations. Something like:

for ($i = 0; $i < $count; $i++) {

for ($j = $i + 1; $j < $count; $j++) {

if ($ranges[$i][0] <= $ranges[$j][1] && $ranges[$j][0] <= $tanges[$i][1])) {

$overlaps[] = [$ranges[$i], $ranges[$j]];

}

}

}We're calling this one the Naive Scanner

And that's it, that's the challenge.

However since then, it's been playing in my head and I've been wondering if there's a better way. What if the 3-5 price range was removed? If we expand the number of inputs and come up with a better implementation, do we see the same improvements with a small quantity of inputs?

It's been a while since I had a play with phpbench/phpbench, so let's dig into this a bit.

Problem Statement

Since this is an expansion on a question that's been running around in my brain for a few days, we need to come up with the constraints of the problem we're trying to solve for. This isn't how these questions usually happen, but in my head we want to solve for:

Given n ranges of integers, write a method that scans a list of ranges and determines if any of the ranges overlap.

As a reminder, our list of assumptions from above:

- All values are always integers

- The first number in a range is always smaller than the second

- Ranges are not necessarily in sorted order.

I don't think it needs to be any more complicated than that, but seeing as we control the problem and the solution this time we're going to need to put together some generators to confirm our approach a little later on. Since our problem statement only cares about if there is an overlap and not how many overlaps there are (keeping in the spirit of the validation nature of the original question), we need to be able to control the number overlaps being generated as well.

Therefore instead of being able to rely on a bunch of random numbers thrown together, it's best if we use a cumulative sum to work our way through.

class ArrayRangesGenerator

{

const int MIN_STEP_SIZE = 1;

const int MAX_STEP_SIZE = 10;

public static function generate(int $count, bool $overlap = false): array

{

$ranges = [];

$counter = 0;

if ($overlap) {

$count--;

}

for ($i = 0; $i < $count; $i++) {

$start = $counter += self::getRandomNumber();

$end = $counter += self::getRandomNumber();

$ranges[] = [$start, $end];

}

if ($overlap) {

$pluck = $ranges[array_rand($ranges)];

$ranges[] = [$pluck[0] - self::getRandomNumber(), $pluck[1] + self::getRandomNumber()];

}

return $ranges;

}

private static function getRandomNumber(): int

{

return rand(self::MIN_STEP_SIZE, self::MAX_STEP_SIZE);

}

}This should generate us any number of ranges we want in an array, which we can use for inputs.

Which we can benchmark to establish a baseline of how long our setup takes:

Subjects: 2, Assertions: 0, Failures: 0, Errors: 0

+------+-------------------------------+-----------------------------+-----+------+------------+----------+--------------+----------------+

| iter | benchmark | subject | set | revs | mem_peak | time_avg | comp_z_value | comp_deviation |

+------+-------------------------------+-----------------------------+-----+------+------------+----------+--------------+----------------+

| 0 | ArrayRangesGeneratorBenchmark | benchGenerateWithOverlap | | 100 | 2,009,448b | 99.340μs | +0.00σ | +0.00% |

| 0 | ArrayRangesGeneratorBenchmark | benchGenerateWithoutOverlap | | 100 | 2,009,448b | 91.480μs | +0.00σ | +0.00% |

+------+-------------------------------+-----------------------------+-----+------+------------+----------+--------------+----------------+All benchmarks in this post are running on a MacBook Air M2 that's also running other tasks at the same time. Your results may vary, but I'd love to hear if you see something wildly different.

Roughly 91 microseconds for our test data with no overlaps, and an extra 8 microseconds to add in an overlapping range.

Naive Scanner

Returning to our basic implementation from above, we should benchmark it to establish the "baseline" we want to try and beat. Using phpbench as above, with the same array sizes (1000 values) and 1000 revisions we get:

+------+-------------------------------+-----------------------------+-----+------+------------+--------------+--------------+----------------+

| iter | benchmark | subject | set | revs | mem_peak | time_avg | comp_z_value | comp_deviation |

+------+-------------------------------+-----------------------------+-----+------+------------+--------------+--------------+----------------+

| 0 | NaiveScannerBenchmark | benchScanWithOverlap | | 1000 | 2,009,416b | 12,150.745μs | +0.00σ | +0.00% |

| 0 | NaiveScannerBenchmark | benchScanWithoutOverlap | | 1000 | 2,009,416b | 17,598.962μs | +0.00σ | +0.00% |

+------+-------------------------------+-----------------------------+-----+------+------------+--------------+--------------+----------------+Much bigger numbers to work with, and a much bigger difference between the two than I was expecting.

Because we know that the overlapping range is at the very end of the array, I'm going to ignore the difference and assume it's due to the return value and returning the method call instead of throwing the exception. It it remains an outlier later we can revisit.

For now though, we have a score. What can we do to beat it?

Sort and Search

What if we sorted the set of ranges first?

This would mean that we only have to loop through the entire array twice instead of nesting loops, and each comparison is only one check instead of potentially 2.

Since we're operating with ranges and ultimately checking for overlaps, we can sort the ranges based on either ending value for the sake of running comparison.

Something like:

usort($ranges, function ($a, $b) {

return $a[0] <=> $b[0];

});

$previousEnd = null;

foreach ($ranges as $range) {

if ($previousEnd !== null && $range[0] <= $previousEnd) {

throw new \RuntimeException("Overlapping ranges detected.");

}

$previousEnd = $range[1];

}

return true;An excellent use case for the underutilized spaceship operator!

Covers all our use cases - sorts the array, and then loops through it an additional time to check for overlaps. How does it perform?

+------+-------------------------------+-------------------------+-----+------+------------+-----------+--------------+----------------+

| iter | benchmark | subject | set | revs | mem_peak | time_avg | comp_z_value | comp_deviation |

+------+-------------------------------+-------------------------+-----+------+------------+-----------+--------------+----------------+

| 0 | SortAndSearchScannerBenchmark | benchScanWithOverlap | | 1000 | 2,009,432b | 341.254μs | +0.00σ | +0.00% |

| 0 | SortAndSearchScannerBenchmark | benchScanWithoutOverlap | | 1000 | 2,009,432b | 292.968μs | +0.00σ | +0.00% |

+------+-------------------------------+-------------------------+-----+------+------------+-----------+--------------+----------------+

That's significantly better

A much lower number!

"Well that's not fair, the array was basically sorted before hand"

You're right, that's not fair. In order to benchmark this properly we need to add in a more randomized set of data, which means a new generator. I still want to keep to only having 1 overlap though (to avoid issues with early exits when the overlap is towards the front) so what if we "unsorted" the array we're already generating and created a new dataset from PHP's shuffle()?

Our old NaiveScanner:

+------+-----------------------+-----------------------------------------+-----+------+------------+--------------+--------------+----------------+

| iter | benchmark | subject | set | revs | mem_peak | time_avg | comp_z_value | comp_deviation |

+------+-----------------------+-----------------------------------------+-----+------+------------+--------------+--------------+----------------+

| 0 | NaiveScannerBenchmark | benchScanWithOverlap | | 1000 | 2,009,416b | 11,840.677μs | +0.00σ | +0.00% |

| 0 | NaiveScannerBenchmark | benchScanWithoutOverlap | | 1000 | 2,009,416b | 17,763.339μs | +0.00σ | +0.00% |

| 0 | NaiveScannerBenchmark | benchScanRandomizedRangesWithOverlap | | 1000 | 2,009,448b | 7,439.978μs | +0.00σ | +0.00% |

| 0 | NaiveScannerBenchmark | benchScanRandomizedRangesWithoutOverlap | | 1000 | 2,009,448b | 18,490.874μs | +0.00σ | +0.00% |

+------+-----------------------+-----------------------------------------+-----+------+------------+--------------+--------------+----------------+Faster on unsorted data makes sense, since the overlap and exception can happen earlier in the loop now

Compared to our SortAndSearch implementation:

+------+-------------------------------+-----------------------------------------+-----+------+------------+-----------+--------------+----------------+

| iter | benchmark | subject | set | revs | mem_peak | time_avg | comp_z_value | comp_deviation |

+------+-------------------------------+-----------------------------------------+-----+------+------------+-----------+--------------+----------------+

| 0 | SortAndSearchScannerBenchmark | benchScanWithOverlap | | 1000 | 2,009,432b | 339.501μs | +0.00σ | +0.00% |

| 0 | SortAndSearchScannerBenchmark | benchScanWithoutOverlap | | 1000 | 2,009,432b | 299.033μs | +0.00σ | +0.00% |

| 0 | SortAndSearchScannerBenchmark | benchScanRandomizedRangesWithOverlap | | 1000 | 2,009,464b | 411.239μs | +0.00σ | +0.00% |

| 0 | SortAndSearchScannerBenchmark | benchScanRandomizedRangesWithoutOverlap | | 1000 | 2,009,464b | 420.104μs | +0.00σ | +0.00% |

+------+-------------------------------+-----------------------------------------+-----+------+------------+-----------+--------------+----------------+Still a clear winner, nice.

Which is still a significant improvement over our original NaiveScanner implementation.

Without digging into more advanced benchmarking setups though, I wonder if attempting to do both the sort and the search in the same loop would be more perform better?

Single Sort And Search Loop

What if we split the difference between the two methods? Can we sort, and check only the neighbouring ranges in the sorted list as new entries are added?

Will trying to implement a hybrid of the two existing methods result in results somewhere in the middle? Probably, but let's give it a go.

Source code on this one is a bit long, so jump ahead and view the final implementation here: https://github.com/tharbakim/overlapping-ranges

+------+----------------------------------+-----------------------------------------+-----+------+------------+-------------+--------------+----------------+

| iter | benchmark | subject | set | revs | mem_peak | time_avg | comp_z_value | comp_deviation |

+------+----------------------------------+-----------------------------------------+-----+------+------------+-------------+--------------+----------------+

| 0 | SingleSortAndSearchLoopBenchmark | benchScanWithOverlap | | 1000 | 2,009,432b | 2,017.878μs | +0.00σ | +0.00% |

| 0 | SingleSortAndSearchLoopBenchmark | benchScanWithoutOverlap | | 1000 | 2,009,432b | 2,037.029μs | +0.00σ | +0.00% |

| 0 | SingleSortAndSearchLoopBenchmark | benchScanRandomizedRangesWithOverlap | | 1000 | 2,009,464b | 1,359.887μs | +0.00σ | +0.00% |

| 0 | SingleSortAndSearchLoopBenchmark | benchScanRandomizedRangesWithoutOverlap | | 1000 | 2,009,464b | 3,099.145μs | +0.00σ | +0.00% |

+------+----------------------------------+-----------------------------------------+-----+------+------------+-------------+--------------+----------------+Yup, lands itself solidly in second place.

As expected, that's somewhere between the first and second implementations.

Conclusion

As we should all expect, searching sorted data is much faster than searching unsorted data. Sorting and then only needing to compare against the neighbouring entries is also much faster than needing to compare against every possible entry.

Still, it's nice to put to rest the question that has been rolling around in my head and confirm that the initial naive approach might be fine for the original restriction of 3-5 values, but sorting the values first makes for a much better performing validation.

To see the benchmarks run for all the approaches together, with varying revs and iterations click on through to the repository: https://github.com/tharbakim/overlapping-ranges